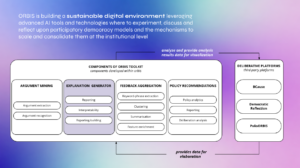

Beyond the “Black Box”: ORBIS Redefines Explainable AI (XAI) at Recent Workshop

Last week, the ORBIS project reached a significant milestone in the dialogue on technological transparency and deliberative democracy. Ilaria Mariani, PhD, Assistant Professor in the Department of Design at Politecnico di Milano, led a comprehensive 90-minute session titled “AI and Explainability: The ORBIS Project Case.”

The session showcased how ORBIS is moving beyond the “classical”, model-centric view of AI toward a human-centered, stakeholder-specific approach to explainability.

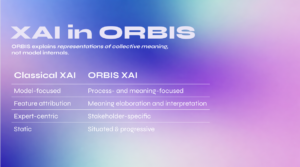

A New Epistemology for XAI

During the workshop, Mariani detailed how ORBIS is challenging traditional industry standards. While “Classical XAI” often focuses on technical feature attribution and static, expert-centric models, the ORBIS XAI framework rests on three different pillars:

- Focus on Collective Meaning: Instead of merely explaining model internals (the “how” of the code), ORBIS explains the representations of collective meaning.
- Co-designed Epistemology: XAI is treated as a foundational way of creating and sharing knowledge, rather than a final “UX decoration” or aesthetic add-on.
- Distributed Intelligence: Explainability is understood as a feature distributed across all project components and platforms, ensuring a seamless flow of information.

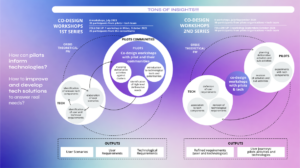

Shaping Technology through Co-Creation

A central theme of the presentation was the impact of the ORBIS Co-Design Workshops. These sessions, which brought together pilot communities and technical teams, were instrumental in shaping the practical application of XAI.

Through these collaborative processes, the project successfully defined:

- WHAT constitutes a meaningful and useful explanation for a non-expert.
- WHEN explanations should be triggered within the deliberative workflow to be most effective.
- TO WHOM information is directed, tailoring the output for participants, facilitators, or policymakers.
- HOW the information is delivered, whether in textual, visual, comparative, or temporal formats.

The session highlighted that, for AI to truly augment democratic participation and trust, it must be “situated and progressive”: growing and adapting alongside the users it serves.