Technology

Leveraging AI to transform public engagement and support informed, inclusive democratic decisions.

The power of AI

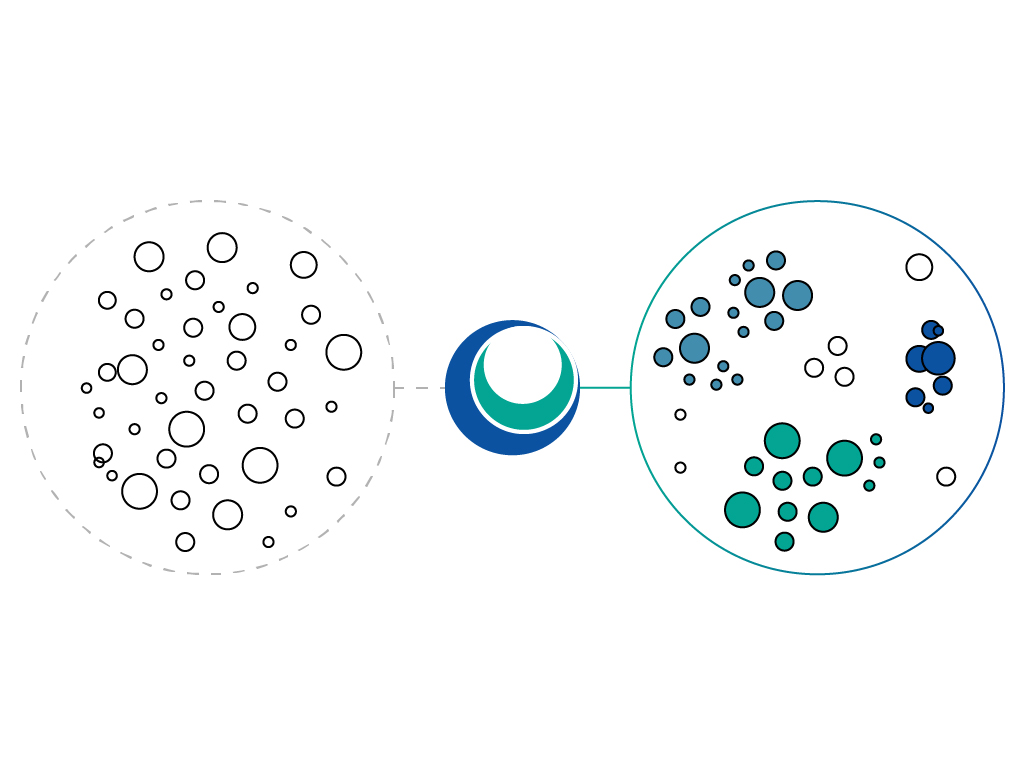

ORBIS harnesses the power of AI to support deliberative democracy, making public engagement more accessible, comprehensible, and impactful. ORBIS has developed and implemented a suite of AI-enhanced tools aimed at advancing and scaling deliberative democracy.

These tools are crafted to improve the accessibility, comprehensibility, and quality of deliberative processes, thus widening participation and deepening understanding of public opinions and policy debates. Relying on advanced data analysis techniques, including natural language processing and related algorithms, the ORBIS toolkit transforms extensive public input into more understandable content and actionable insights, promoting more inclusive and effective democratic deliberations.

Timelines and argument trees

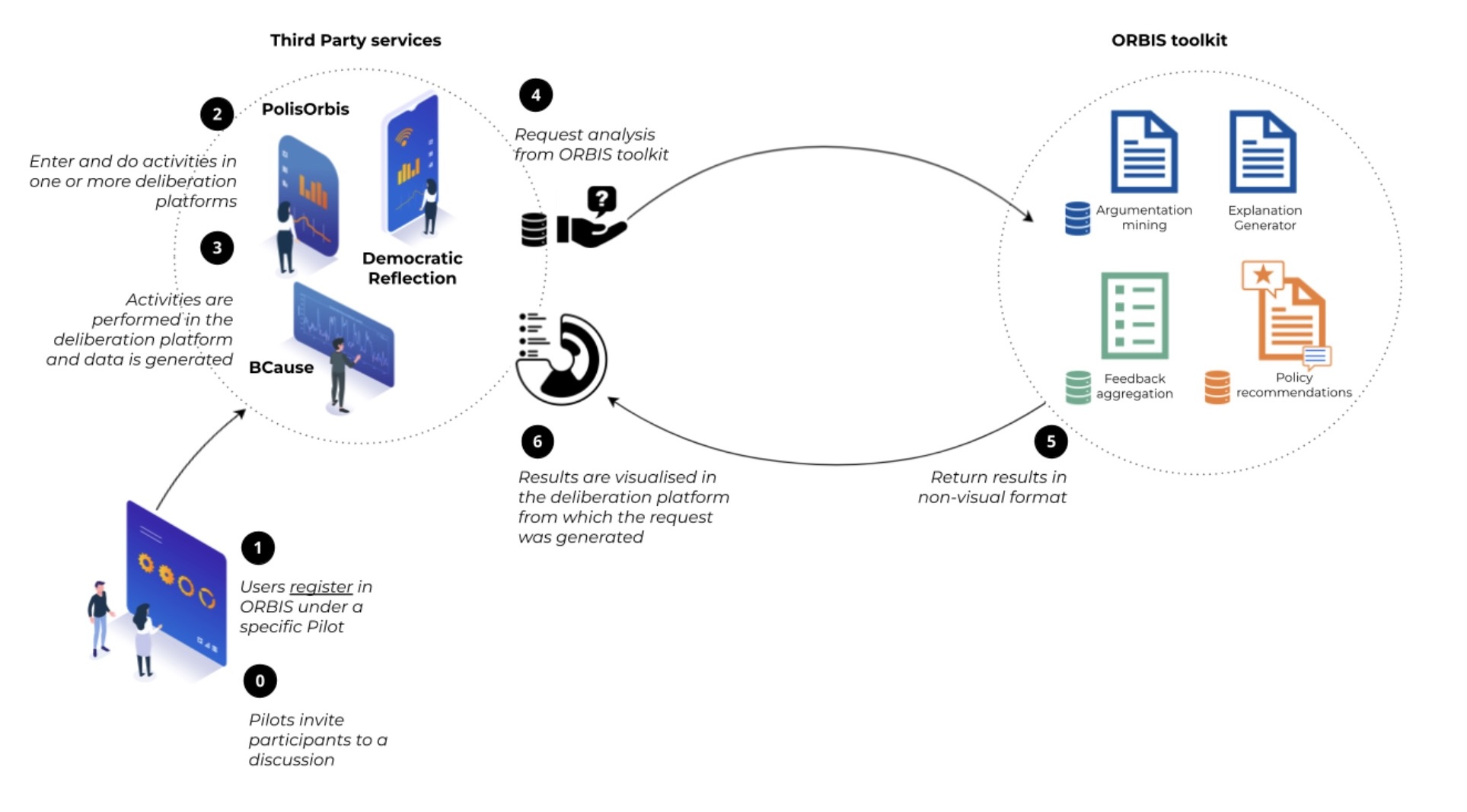

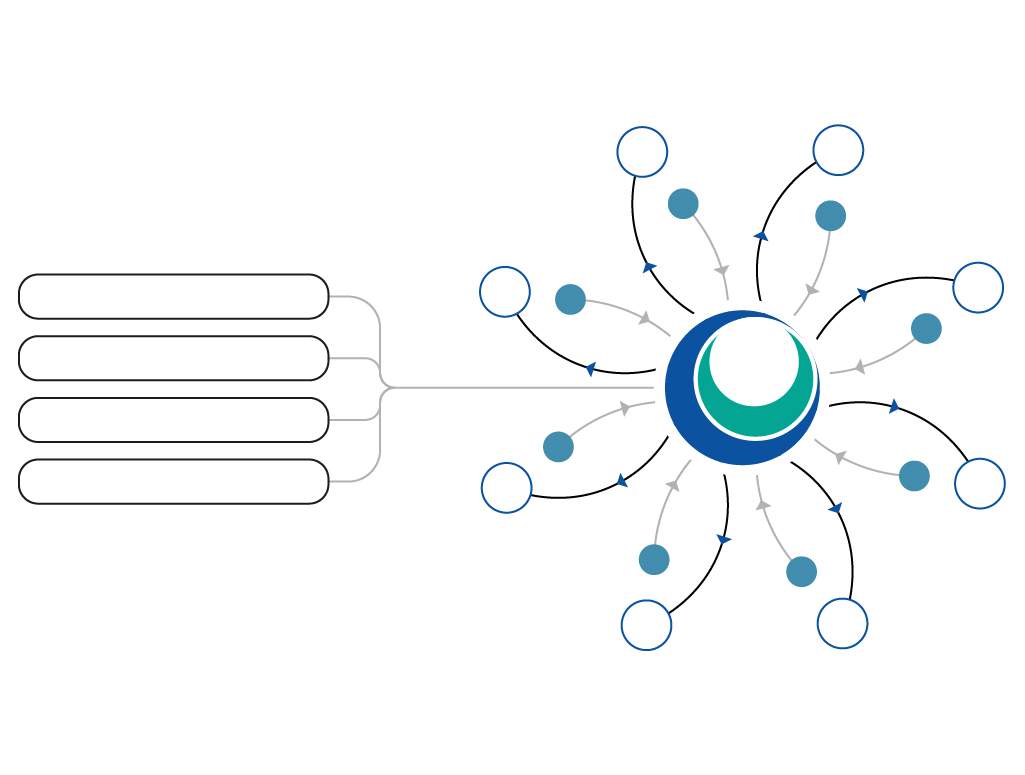

This toolkit is integrated within various project-specific deliberative platforms: BCause, PolisOrbis, and Democratic Reflection, each designed to meet unique deliberative requirements. BCause structures debates into supportive and opposing views, displaying these through timelines and argument trees, and it synthesises discussions by highlighting relevant contributions. PolisOrbis, leveraging the open-source Pol.is framework, facilitates large-scale discussions by employing AI to instantly identify and display emerging patterns of consensus. Democratic Reflection enhances participant engagement by incorporating interactive prompts alongside discussions, which guide reflective thinking and offer personalised feedback post-engagement.
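To make "patterns of consensus" more concrete, here is a minimal Python sketch of one way cross-group agreement could be detected from votes. It is an illustration under stated assumptions, not a description of how Pol.is or PolisOrbis computes consensus: the function names, the 0.6 threshold, the vote encoding (1 agree, -1 disagree, 0 pass), the pre-assigned opinion groups, and the sample votes are all hypothetical.

```python
def agreement_rate(votes):
    """Share of 'agree' votes among votes actually cast (passes, coded 0, are ignored)."""
    cast = [v for v in votes if v != 0]
    return sum(1 for v in cast if v == 1) / len(cast) if cast else 0.0


def consensus_statements(votes_by_group, threshold=0.6):
    """Return statements that every group agrees with at or above the threshold."""
    statements = set()
    for group_votes in votes_by_group.values():
        statements.update(group_votes)
    result = []
    for statement in sorted(statements):
        rates = [
            agreement_rate(group_votes.get(statement, []))
            for group_votes in votes_by_group.values()
        ]
        # A statement counts as consensus only if the least convinced group agrees too.
        if min(rates) >= threshold:
            result.append(statement)
    return result


# Hypothetical votes: 1 = agree, -1 = disagree, 0 = pass.
votes_by_group = {
    "group_a": {"s1": [1, 1, 1, -1], "s2": [1, 1, 0, 1]},
    "group_b": {"s1": [-1, -1, 1], "s2": [1, 1, -1, 1]},
}
consensus = consensus_statements(votes_by_group)
print(consensus)
```

Taking the minimum across groups, rather than the overall average, keeps a large group from outvoting a small one, which is the point of looking for consensus rather than a simple majority.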

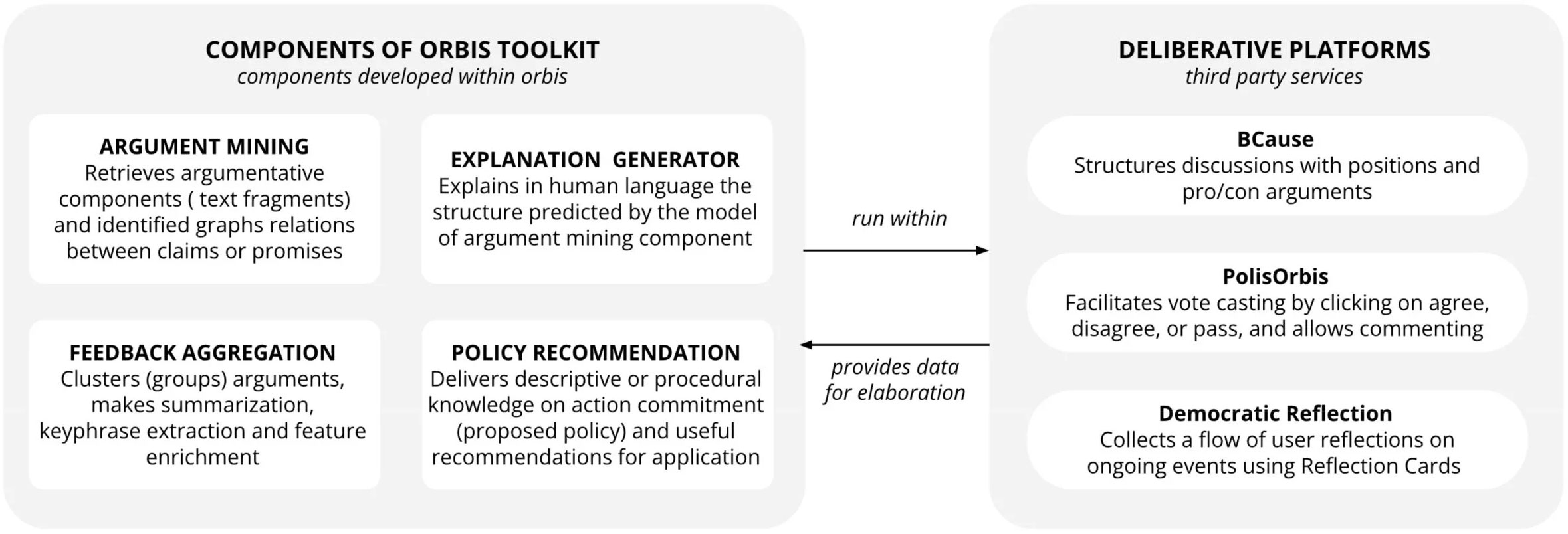

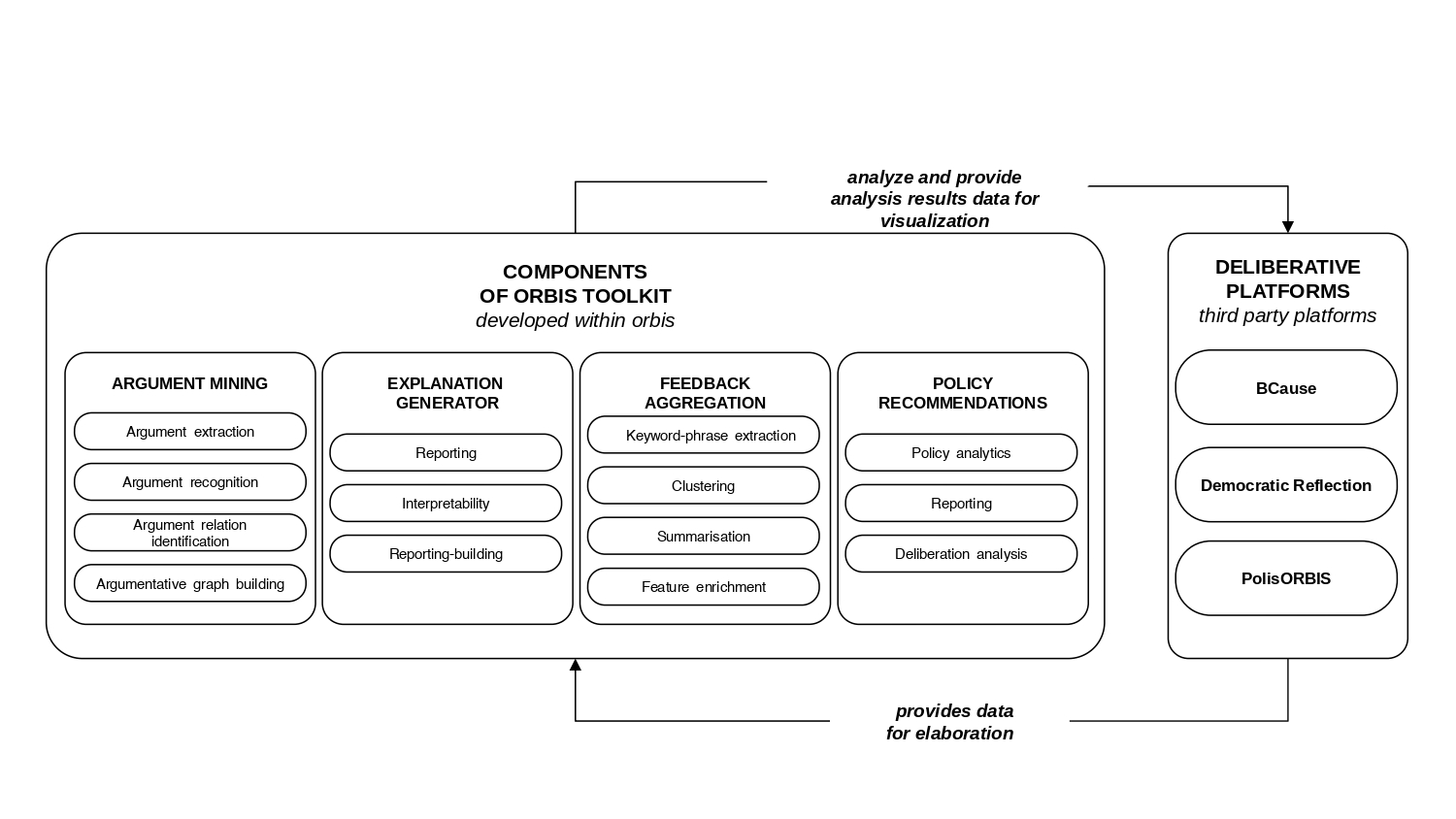

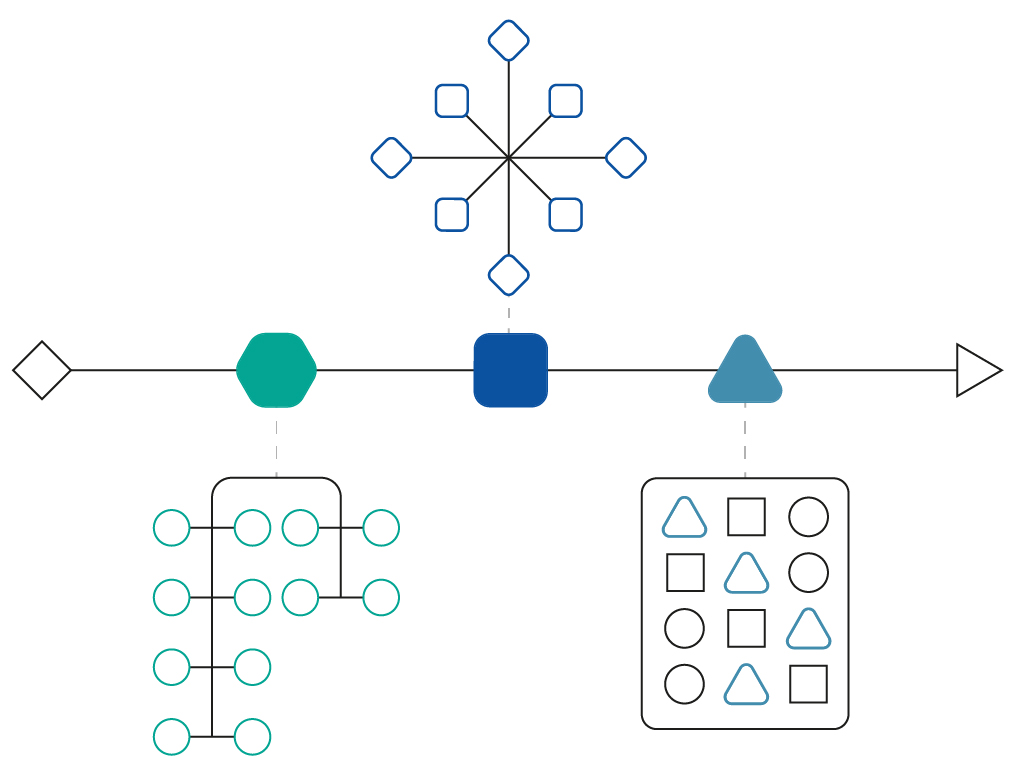

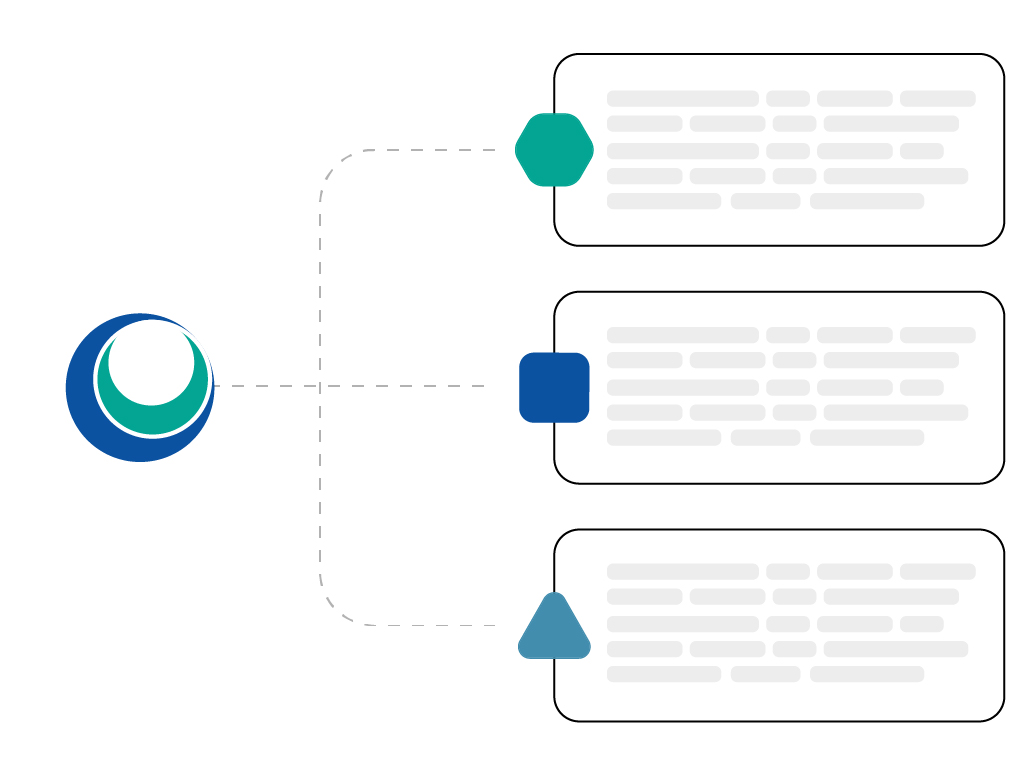

AI components in the ORBIS toolkit, their description, and interaction with third-party deliberative platforms:

The ORBIS Toolkit, tool by tool

Within the ORBIS toolkit, each tool addresses a unique aspect of deliberation enhancement.

Argument Mining

Argument Mining (AM) focuses on automatically extracting and structuring argumentative components from texts, analysing how arguments are constructed in order to map reasoning across discourses such as debates, legal documents, or online discussions. In the classical AM pipeline, argumentative components are categorised into Premises (Evidence) and Claims (Positions, Opinions, etc.), with relationships defined as Support or Attack. These components and their relationships are organised into logical graph structures that represent how arguments interact, revealing the underlying reasoning in public policy discussions.
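The graph structure described above can be sketched in a few lines of Python. This is a minimal illustration of the data model only (claims and premises as nodes, Support and Attack as labelled edges); it does not perform any extraction from text. The class and method names and the sample statements are hypothetical and are not taken from the ORBIS codebase.

```python
from dataclasses import dataclass, field
from enum import Enum


class Kind(Enum):
    CLAIM = "claim"
    PREMISE = "premise"


class Relation(Enum):
    SUPPORT = "support"
    ATTACK = "attack"


@dataclass
class ArgumentGraph:
    # node id -> (component kind, text)
    nodes: dict = field(default_factory=dict)
    # directed edges: (source id, target id, relation)
    edges: list = field(default_factory=list)

    def add_node(self, node_id, kind, text):
        self.nodes[node_id] = (kind, text)

    def link(self, source, target, relation):
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both endpoints must be added before linking")
        self.edges.append((source, target, relation))

    def incoming(self, target, relation):
        """Ids of components that stand in the given relation to the target."""
        return [s for s, t, r in self.edges if t == target and r is relation]


# Made-up example statements, for illustration only.
graph = ArgumentGraph()
graph.add_node("c1", Kind.CLAIM, "The city should add more bike lanes.")
graph.add_node("p1", Kind.PREMISE, "Protected lanes make cycling safer.")
graph.add_node("p2", Kind.PREMISE, "Removing parking would hurt local shops.")
graph.link("p1", "c1", Relation.SUPPORT)
graph.link("p2", "c1", Relation.ATTACK)

supporters = graph.incoming("c1", Relation.SUPPORT)
attackers = graph.incoming("c1", Relation.ATTACK)
print(supporters, attackers)
```

Storing relations as labelled directed edges makes it straightforward to list what supports or attacks a given claim, which is the kind of view an argument tree presents.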

Explanation Generator

Explanation Generator (EG) is a module designed to produce clear, human-readable explanations based on data processed by AI components. Its main goal is to improve understanding—both for citizens and institutions—of how deliberative conclusions are reached within ORBIS. By transparently detailing the reasoning steps, inference patterns, and logic applied by the AI, EG helps to clarify complex processes and enhance interpretability. This transparency is essential in fostering trust in AI-assisted decision-making, supporting accountability, and encouraging informed participation in democratic processes supported by the ORBIS system.

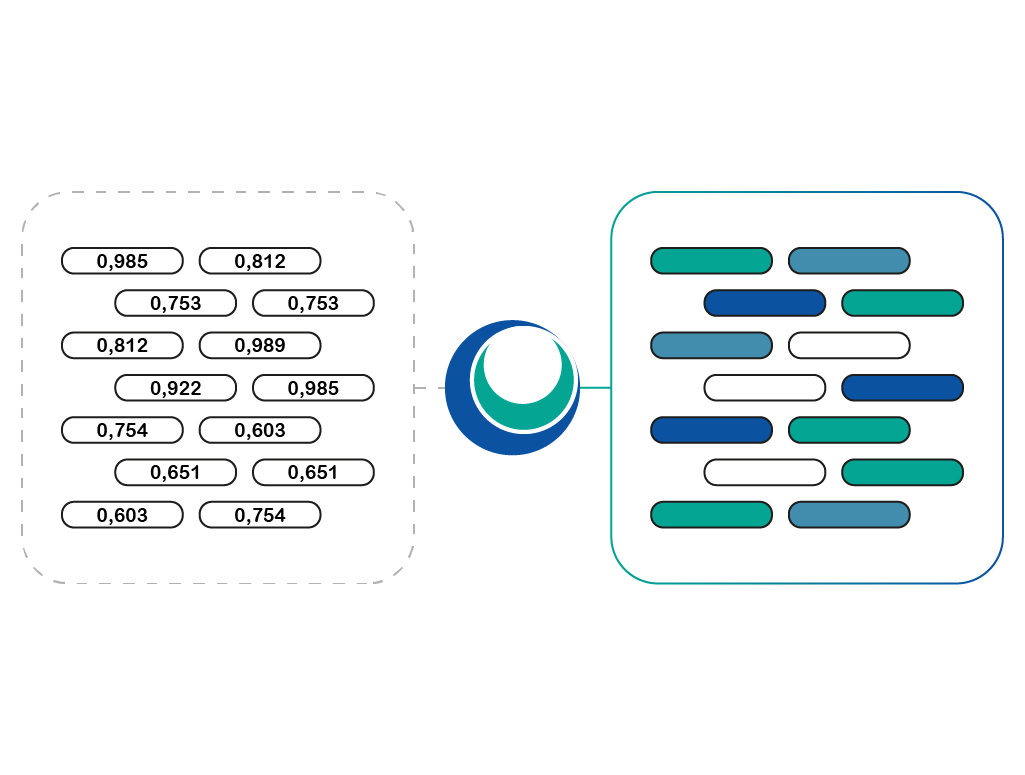

Feedback Aggregation

Feedback Aggregation (FA) is a component that collects and organises large volumes of input from deliberative discussions and generates structured data from it. It identifies and clusters key ideas, extracts salient phrases, provides summaries and titles, and enriches the dataset with contextual and semantic features. This process enables the detection of recurring themes, prevailing sentiments, and areas of consensus or disagreement. By transforming dispersed and often complex feedback into concise and actionable insights, FA supports both citizens and institutions in grasping the overall direction of the debate, facilitating more informed and inclusive decision-making within the ORBIS framework.
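As a rough illustration of the clustering and salient-phrase steps, the Python sketch below groups comments by word overlap and lists the most frequent terms per group. It uses a deliberately simple stand-in technique (greedy single-pass clustering on Jaccard similarity of token sets), not the method used in ORBIS, which the text above does not specify. The stopword list, the 0.25 threshold, the function names, and the sample comments are all assumptions made for the example.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption, not a standard resource).
STOPWORDS = {"the", "a", "an", "to", "of", "and", "is", "are", "in", "on", "for", "should"}


def tokens(text):
    """Lowercased set of words with stopwords removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def jaccard(a, b):
    """Overlap between two token sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster(comments, threshold=0.25):
    """Assign each comment to the first cluster whose seed comment is similar enough."""
    clusters = []  # each cluster is a list of (comment, token set) pairs
    for comment in comments:
        toks = tokens(comment)
        for members in clusters:
            if jaccard(toks, members[0][1]) >= threshold:
                members.append((comment, toks))
                break
        else:
            clusters.append([(comment, toks)])
    return clusters


def salient_terms(members, top_n=2):
    """Most frequent terms in a cluster, ties broken alphabetically."""
    counts = Counter(t for _, toks in members for t in toks)
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [term for term, _ in ranked[:top_n]]


# Made-up comments, for illustration only.
comments = [
    "Bus routes are too infrequent in the evening",
    "Evening bus routes should run later",
    "The park needs better lighting",
    "Better lighting in the park would feel safer",
]
clusters = cluster(comments)
for members in clusters:
    print(salient_terms(members), [c for c, _ in members])
```

A production system would more likely rely on sentence embeddings and a dedicated clustering algorithm; the token-overlap version is used here only because it is short and has no external dependencies.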

Policy Recommendation

Policy Recommendation (PR) is a component that builds upon the insights generated by Feedback Aggregation (FA) to support the formulation of policies and collective decision-making. It refines the outputs of structured argument analysis and summarised feedback, transforming them into concrete, actionable policy suggestions. By aligning these recommendations with the preferences, concerns, and values expressed by participants throughout the deliberative process, PR ensures that proposed actions are both relevant and democratically grounded. This module plays a key role in translating citizen input into meaningful outcomes within the ORBIS decision-making ecosystem.

Systematic co-creation process

The development of ORBIS’s technological components has evolved through a systematic co-creation process with six organisations that manage deliberative experiments in pilot environments. This staged co-design approach has facilitated the ongoing collection of data, consistently securing valuable insights and requirements.